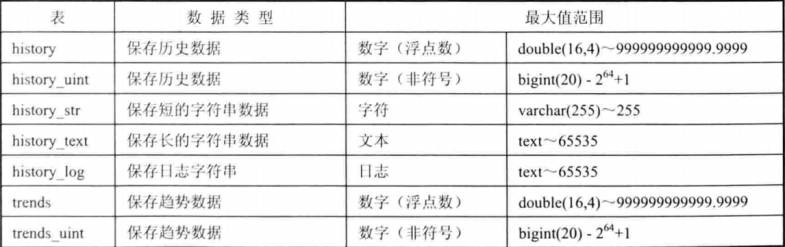

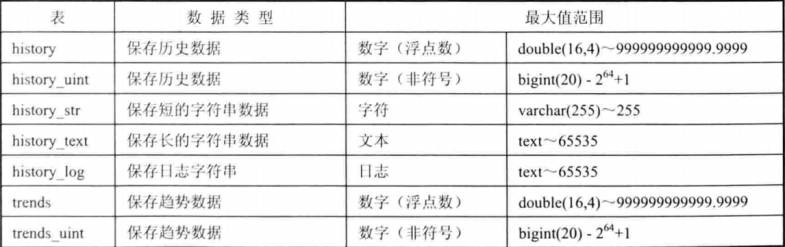

Zabbix MySQL Database Partitioning: Using Table Partitions Instead of Housekeeping to Speed Up Historical Data Cleanup

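By default Zabbix enables the housekeeping feature to purge historical data such as history and trends. As the number of monitored servers grows and retention requirements apply, the housekeeper's cleanup strategy degrades Zabbix Server performance, for example when querying historical monitoring data. The official Zabbix recommendation is to partition the tables by time directly in the database and drop old partitions on a schedule, which takes load off Zabbix Server and improves the database read/write performance seen by the Zabbix application. Zabbix 3.4 and later versions also add support for Elasticsearch.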

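A database can be scaled horizontally or vertically. Here the data is distributed, and splitting a table into partitions can be seen as one form of distribution.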

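In zabbix_server.conf, find the following two parameters:

(1) HousekeepingFrequency=1: how often expired data is deleted from the database (the interval). The default is one hour.

(2) MaxHousekeeperDelete=5000: the upper limit on how much data is deleted per run (the maximum deletion count). The default is 5000.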
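To see what these two parameters are currently set to, something like the following can be used. This is a minimal sketch: the path /etc/zabbix/zabbix_server.conf and the ZABBIX_CONF variable are assumptions, and the helper name show_housekeeper_settings is made up for illustration. A source build with --prefix=/usr/local/zabbix would keep the file elsewhere.

```shell
#!/bin/sh
# Assumed location of the server config; override with ZABBIX_CONF if needed.
ZABBIX_CONF="${ZABBIX_CONF:-/etc/zabbix/zabbix_server.conf}"

# Print the two housekeeper parameters from the given config file.
# Commented-out lines are matched too, so untouched defaults are also shown.
show_housekeeper_settings() {
    grep -E '^[#[:space:]]*(HousekeepingFrequency|MaxHousekeeperDelete)=' "$1"
}

if [ -r "$ZABBIX_CONF" ]; then
    show_housekeeper_settings "$ZABBIX_CONF"
fi
```

A line that still starts with # means the parameter has not been changed from its default.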

SELECT table_name AS "Tables",

round(((data_length + index_length) / 1024 / 1024), 2) "Size in MB"

FROM information_schema.TABLES

WHERE table_schema = 'zabbix'

ORDER BY (data_length + index_length) DESC;

+----------------------------+------------+

| Tables | Size in MB |

+----------------------------+------------+

| history_uint | 16452.17 |

| history | 3606.36 |

| history_str | 2435.03 |

| trends_uint | 722.48 |

| trends | 176.28 |

| history_text | 10.03 |

| alerts | 7.47 |

| items | 5.78 |

| triggers | 3.72 |

| events | 2.64 |

| images | 1.53 |

| items_applications | 0.70 |

| item_discovery | 0.58 |

| functions | 0.53 |

| event_recovery | 0.38 |

| item_preproc | 0.38 |

………………

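As shown above, the history_uint table exceeds 16 GB. As the data keeps growing, queries become noticeably slower, so table partitioning is used to improve the performance of Zabbix's MySQL operations.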

truncate table history;

optimize table history;

truncate table history_str;

optimize table history_str;

truncate table history_uint;

optimize table history_uint;

truncate table trends;

optimize table trends;

truncate table trends_uint;

optimize table trends_uint;

truncate table events;

optimize table events;

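Note: these commands wipe all Zabbix monitoring data. Only the monitoring data is cleared; the hosts you added, the configuration, and the maps are not lost. If the monitoring data matters to you, back up the database first. truncate drops the table and then re-creates it from the table structure.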

vim partition.sql

DELIMITER $$

CREATE PROCEDURE `partition_create`(SCHEMANAME varchar(64), TABLENAME varchar(64), PARTITIONNAME varchar(64), CLOCK int)

BEGIN

/*

SCHEMANAME = The DB schema in which to make changes

TABLENAME = The table in which to create the partition

PARTITIONNAME = The name of the partition to create

*/

/*

Verify that the partition does not already exist

*/

DECLARE RETROWS INT;

SELECT COUNT(1) INTO RETROWS

FROM information_schema.partitions

WHERE table_schema = SCHEMANAME AND table_name = TABLENAME AND partition_description >= CLOCK;

IF RETROWS = 0 THEN

/*

1. Print a message indicating that a partition was created.

2. Create the SQL to create the partition.

3. Execute the SQL from #2.

*/

SELECT CONCAT( "partition_create(", SCHEMANAME, ",", TABLENAME, ",", PARTITIONNAME, ",", CLOCK, ")" ) AS msg;

SET @sql = CONCAT( 'ALTER TABLE ', SCHEMANAME, '.', TABLENAME, ' ADD PARTITION (PARTITION ', PARTITIONNAME, ' VALUES LESS THAN (', CLOCK, '));' );

PREPARE STMT FROM @sql;

EXECUTE STMT;

DEALLOCATE PREPARE STMT;

END IF;

END$$

DELIMITER ;

DELIMITER $$

CREATE PROCEDURE `partition_drop`(SCHEMANAME VARCHAR(64), TABLENAME VARCHAR(64), DELETE_BELOW_PARTITION_DATE BIGINT)

BEGIN

/*

SCHEMANAME = The DB schema in which to make changes

TABLENAME = The table with partitions to potentially delete

DELETE_BELOW_PARTITION_DATE = Delete any partitions with names that are dates older than this one (yyyy-mm-dd)

*/

DECLARE done INT DEFAULT FALSE;

DECLARE drop_part_name VARCHAR(16);

/*

Get a list of all the partitions that are older than the date

in DELETE_BELOW_PARTITION_DATE. All partitions are prefixed with

a "p", so use SUBSTRING TO get rid of that character.

*/

DECLARE myCursor CURSOR FOR

SELECT partition_name

FROM information_schema.partitions

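WHERE table_schema = SCHEMANAME AND table_name = TABLENAME AND CAST(SUBSTRING(partition_name FROM 2) AS UNSIGNED) < DELETE_BELOW_PARTITION_DATE;

DECLARE CONTINUE HANDLER FOR NOT FOUND SET done = TRUE;

/*

Build the ALTER TABLE prefix and a comma-separated list of the partitions to drop.

*/

SET @alter_header = CONCAT("ALTER TABLE ", SCHEMANAME, ".", TABLENAME, " DROP PARTITION ");

SET @drop_partitions = "";

OPEN myCursor;

read_loop: LOOP

FETCH myCursor INTO drop_part_name;

IF done THEN

LEAVE read_loop;

END IF;

SET @drop_partitions = IF(@drop_partitions = "", drop_part_name, CONCAT(@drop_partitions, ",", drop_part_name));

END LOOP;

IF @drop_partitions != "" THEN

SET @full_sql = CONCAT(@alter_header, @drop_partitions, ";");

PREPARE STMT FROM @full_sql;

EXECUTE STMT;

DEALLOCATE PREPARE STMT;

SELECT CONCAT(SCHEMANAME, ".", TABLENAME) AS `table`, @drop_partitions AS `partitions_deleted`;

ELSE

SELECT CONCAT(SCHEMANAME, ".", TABLENAME) AS `table`, "N/A" AS `partitions_deleted`;

END IF;

END$$

DELIMITER ;

DELIMITER $$

CREATE PROCEDURE `partition_maintenance`(SCHEMA_NAME VARCHAR(32), TABLE_NAME VARCHAR(32), KEEP_DATA_DAYS INT, HOURLY_INTERVAL INT, CREATE_NEXT_INTERVALS INT)

BEGIN

DECLARE OLDER_THAN_PARTITION_DATE VARCHAR(16);

DECLARE PARTITION_NAME VARCHAR(16);

DECLARE OLD_PARTITION_NAME VARCHAR(16);

DECLARE LESS_THAN_TIMESTAMP INT;

DECLARE CUR_TIME INT;

CALL partition_verify(SCHEMA_NAME, TABLE_NAME, HOURLY_INTERVAL);

SET CUR_TIME = UNIX_TIMESTAMP(DATE_FORMAT(NOW(), '%Y-%m-%d 00:00:00'));

SET @__interval = 1;

create_loop: LOOP

IF @__interval > CREATE_NEXT_INTERVALS THEN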

LEAVE create_loop;

END IF;

SET LESS_THAN_TIMESTAMP = CUR_TIME + (HOURLY_INTERVAL * @__interval * 3600);

SET PARTITION_NAME = FROM_UNIXTIME(CUR_TIME + HOURLY_INTERVAL * (@__interval - 1) * 3600, 'p%Y%m%d%H00');

IF(PARTITION_NAME != OLD_PARTITION_NAME) THEN

CALL partition_create(SCHEMA_NAME, TABLE_NAME, PARTITION_NAME, LESS_THAN_TIMESTAMP);

END IF;

SET @__interval=@__interval+1;

SET OLD_PARTITION_NAME = PARTITION_NAME;

END LOOP;

SET OLDER_THAN_PARTITION_DATE=DATE_FORMAT(DATE_SUB(NOW(), INTERVAL KEEP_DATA_DAYS DAY), '%Y%m%d0000');

CALL partition_drop(SCHEMA_NAME, TABLE_NAME, OLDER_THAN_PARTITION_DATE);

END$$

DELIMITER ;
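The arithmetic in the create loop of partition_maintenance can be reproduced outside MySQL to see which partition name and upper bound each interval maps to. The following is a minimal sketch, not part of the stored procedures: it assumes GNU date, the function name partition_plan is made up for illustration, and the time zone is pinned to UTC+8 (POSIX string CST-8) as an assumption so that the result is reproducible.

```shell
#!/bin/sh
# Assumption: UTC+8, written as a POSIX TZ string so no tzdata is required.
export TZ=CST-8

# partition_plan START_EPOCH HOURLY_INTERVAL COUNT
# For each interval i = 1..COUNT print "<partition name> <upper bound>",
# mirroring PARTITION_NAME and LESS_THAN_TIMESTAMP in the loop above.
partition_plan() {
    start=$1
    hours=$2
    count=$3
    i=1
    while [ "$i" -le "$count" ]; do
        # Upper bound: start of day plus i intervals (VALUES LESS THAN ...)
        less_than=$((start + hours * i * 3600))
        # Name: start of the interval formatted as pYYYYmmddHH00
        name=$(date -d "@$((start + hours * (i - 1) * 3600))" '+p%Y%m%d%H00')
        echo "$name $less_than"
        i=$((i + 1))
    done
}

# 1617984000 is 2021-04-10 00:00:00 in UTC+8; plan three daily partitions.
partition_plan 1617984000 24 3
# p202104100000 1618070400
# p202104110000 1618156800
# p202104120000 1618243200
```

Each partition is named after the start of its interval and bounded by the start of the next one, so a daily partition holds exactly one local calendar day of data.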

DELIMITER $$

CREATE PROCEDURE `partition_verify`(SCHEMANAME VARCHAR(64), TABLENAME VARCHAR(64), HOURLYINTERVAL INT(11))

BEGIN

DECLARE PARTITION_NAME VARCHAR(16);

DECLARE RETROWS INT(11);

DECLARE FUTURE_TIMESTAMP TIMESTAMP;

/*

* Check if any partitions exist for the given SCHEMANAME.TABLENAME.

*/

SELECT COUNT(1) INTO RETROWS

FROM information_schema.partitions

WHERE table_schema = SCHEMANAME AND table_name = TABLENAME AND partition_name IS NULL;

/*

* If partitions do not exist, go ahead and partition the table

*/

IF RETROWS = 1 THEN

/*

* Take the current date at 00:00:00 and add HOURLYINTERVAL to it. This is the timestamp below which we will store values.

* We begin partitioning based on the beginning of a day. This is because we don't want to generate a random partition

* that won't necessarily fall in line with the desired partition naming (ie: if the hour interval is 24 hours, we could

* end up creating a partition now named "p201403270600" when all other partitions will be like "p201403280000").

*/

SET FUTURE_TIMESTAMP = TIMESTAMPADD(HOUR, HOURLYINTERVAL, CONCAT(CURDATE(), " ", '00:00:00'));

SET PARTITION_NAME = DATE_FORMAT(CURDATE(), 'p%Y%m%d%H00');

-- Create the partitioning query

SET @__PARTITION_SQL = CONCAT("ALTER TABLE ", SCHEMANAME, ".", TABLENAME, " PARTITION BY RANGE(`clock`)");

SET @__PARTITION_SQL = CONCAT(@__PARTITION_SQL, "(PARTITION ", PARTITION_NAME, " VALUES LESS THAN (", UNIX_TIMESTAMP(FUTURE_TIMESTAMP), "));");

-- Run the partitioning query

PREPARE STMT FROM @__PARTITION_SQL;

EXECUTE STMT;

DEALLOCATE PREPARE STMT;

END IF;

END$$

DELIMITER ;

DELIMITER $$

CREATE PROCEDURE `partition_maintenance_all`(SCHEMA_NAME VARCHAR(32))

BEGIN

CALL partition_maintenance(SCHEMA_NAME, 'history', 90, 24, 14);

CALL partition_maintenance(SCHEMA_NAME, 'history_log', 90, 24, 14);

CALL partition_maintenance(SCHEMA_NAME, 'history_str', 90, 24, 14);

CALL partition_maintenance(SCHEMA_NAME, 'history_text', 90, 24, 14);

CALL partition_maintenance(SCHEMA_NAME, 'history_uint', 90, 24, 14);

CALL partition_maintenance(SCHEMA_NAME, 'trends', 730, 24, 14);

CALL partition_maintenance(SCHEMA_NAME, 'trends_uint', 730, 24, 14);

END$$

DELIMITER ;
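In the calls above, the third argument is the number of days of data to keep (90 for the history tables, 730 for the trends tables). partition_maintenance turns it into a yyyymmdd0000 cutoff, and the comments in partition_drop describe stripping the leading "p" from each partition name and treating the rest as a date to compare against it. The following is a minimal sketch of that selection, assuming GNU date; the function name partitions_to_drop is made up for illustration.

```shell
#!/bin/sh
# partitions_to_drop CUTOFF NAME...
# CUTOFF is a number such as 202104120000 (yyyymmdd0000).
# Prints every partition name whose numeric part is below the cutoff.
partitions_to_drop() {
    cutoff=$1
    shift
    for name in "$@"; do
        stamp=${name#p}    # strip the leading "p"
        if [ "$stamp" -lt "$cutoff" ]; then
            echo "$name"
        fi
    done
}

# Cutoff for a 90-day retention, in the same format the procedure builds.
date -d '90 days ago' '+%Y%m%d0000'

# With a fixed cutoff, only the two older partitions are selected.
partitions_to_drop 202104120000 p202104100000 p202104110000 p202104120000 p202104130000
# p202104100000
# p202104110000
```

Dropping a whole partition is a metadata operation on the table, which is why it is much cheaper than the row-by-row deletes done by the housekeeper.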

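mysql -uzabbix -pzabbix zabbix < partition.sql

mysql -uzabbix -pzabbix zabbix -e "CALL partition_maintenance_all('zabbix');" > /tmp/partition.log

# Schedule the second command with cron. For the first run you can have it execute once a minute, then check the log file to confirm it runs correctly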

mysql> show create table history_uint;

+--------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Table | Create Table |

+--------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| history_uint | CREATE TABLE `history_uint` (

`itemid` bigint(20) unsigned NOT NULL,

`clock` int(11) NOT NULL DEFAULT '0',

`value` bigint(20) unsigned NOT NULL DEFAULT '0',

`ns` int(11) NOT NULL DEFAULT '0',

KEY `history_uint_1` (`itemid`,`clock`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin

/*!50100 PARTITION BY RANGE (`clock`)

(PARTITION p202104100000 VALUES LESS THAN (1618070400) ENGINE = InnoDB,

PARTITION p202104110000 VALUES LESS THAN (1618156800) ENGINE = InnoDB,

PARTITION p202104120000 VALUES LESS THAN (1618243200) ENGINE = InnoDB,

PARTITION p202104130000 VALUES LESS THAN (1618329600) ENGINE = InnoDB,

PARTITION p202104140000 VALUES LESS THAN (1618416000) ENGINE = InnoDB,

PARTITION p202104150000 VALUES LESS THAN (1618502400) ENGINE = InnoDB,

PARTITION p202104160000 VALUES LESS THAN (1618588800) ENGINE = InnoDB,

PARTITION p202104170000 VALUES LESS THAN (1618675200) ENGINE = InnoDB,

PARTITION p202104180000 VALUES LESS THAN (1618761600) ENGINE = InnoDB,

PARTITION p202104190000 VALUES LESS THAN (1618848000) ENGINE = InnoDB,

PARTITION p202104200000 VALUES LESS THAN (1618934400) ENGINE = InnoDB,

PARTITION p202104210000 VALUES LESS THAN (1619020800) ENGINE = InnoDB,

PARTITION p202104220000 VALUES LESS THAN (1619107200) ENGINE = InnoDB,

PARTITION p202104230000 VALUES LESS THAN (1619193600) ENGINE = InnoDB) */ |

+--------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

1 row in set (0.01 sec)
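The VALUES LESS THAN numbers in this output are Unix timestamps. A minimal sketch for decoding them, assuming GNU date and assuming the server runs in UTC+8 (an inference from the values themselves, written here as the POSIX TZ string CST-8); the helper name boundary_to_local is made up for illustration:

```shell
#!/bin/sh
# Assumption: the MySQL server's time zone is UTC+8.
export TZ=CST-8

# Convert a partition upper bound (Unix timestamp) to local time.
boundary_to_local() {
    date -d "@$1" '+%Y-%m-%d %H:%M:%S'
}

boundary_to_local 1618070400   # bound of p202104100000 -> 2021-04-11 00:00:00
boundary_to_local 1619193600   # bound of p202104230000 -> 2021-04-24 00:00:00
```

Under that assumption each partition ends at local midnight of the following day, and the 14 partitions listed match the value 14 passed as the last argument in partition_maintenance_all.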

In the web interface, go to Administration > General > Settings > Housekeeping and disable the housekeeper for History and Trends.

Installation notes

./configure --prefix=/usr/local/zabbix --enable-server --enable-agent --with-mysql=/usr/local/mysql/bin/mysql_config --enable-ipv6 --with-net-snmp --with-libcurl --with-openssl --with-libxml2

# --with-libxml2 is added to the build options in preparation for monitoring VMware vSphere hosts

create database zabbix character set utf8 collate utf8_bin;

# Create the database with the utf8 character set and the utf8_bin collation

# The DBSocket parameter in zabbix_server.conf must be set

References:

https://blog.csdn.net/qq_22656871/article/details/110237755

https://wsgzao.github.io/post/zabbix-mysql-partition/

https://www.cnblogs.com/yjt1993/p/10871574.html